I have to admit the current AI hype train is causing me some cognitive dissonance. The Council of Jukesies yapping away in my head are raising their voices as we try to decide just what the hell we think about it all.

Then I saw this post on Bluesky and it kind of summed things up for me – as much as anything (for me) it is a branding issue.

There are a whole host of things happening at the moment described as “AI” (imagine sarcastic finger quotes) that just a few years ago would have been described as ‘machine learning’ or ‘data science’ activity. It is mainly unglamorous, behind-the-scenes, complex, boring magic. It doesn’t get headlines and is never going to be consumer facing. It is probably not going to make anyone a billionaire, but it is useful and helpful now.

Things like what James wrote about here:

Though of course whether Councils can do anything about all those identified current and potential potholes is a different question!

Then there are things being built – or rather prototyped – by people to create little apps or services to fix small problems or investigate issues personal to them – too niche or specialised to attract the attention of the wider tech community. More than hobbyists but not by much…the sort of things we could build in the past when the web was open:

The GenAI/LLM stuff really confounds me. I find some of it fun and interesting and can absolutely see there is potential for it to be important…but it feels like it is nowhere near ready for primetime, and half the world has massively jumped the gun and is turning a blind eye to the fact that companies are basically releasing experimental alphas (not even betas) into the wild and pretending they are products that can be used for actual real things. It feels like some kind of shared global delusion!

Also I don’t want “AI” to be creative. I want it to do the boring shit, the things that free me up to do the fun things. We need to protect authors, artists, musicians etc – not sell them out for a future of slop everywhere. Of this I am sure…even given my history of supporting open licensing etc.

Steve shared this great slide yesterday, which I think I’ll be using a lot in the future – a reminder to me (again) that this is all applied statistics and probability stuff – but no VC is funding anything described like that, are they? 😉

Anyway, I don’t think I am alone in struggling with all this a bit. Whereas with things like Blockchain and the Metaverse I leaned into my naysayer mode early and confidently, I find this all more nuanced, and the hype doesn’t help (some of the Government coverage around the AI Opportunities Action Plan felt mind-blowing in its naivety!).

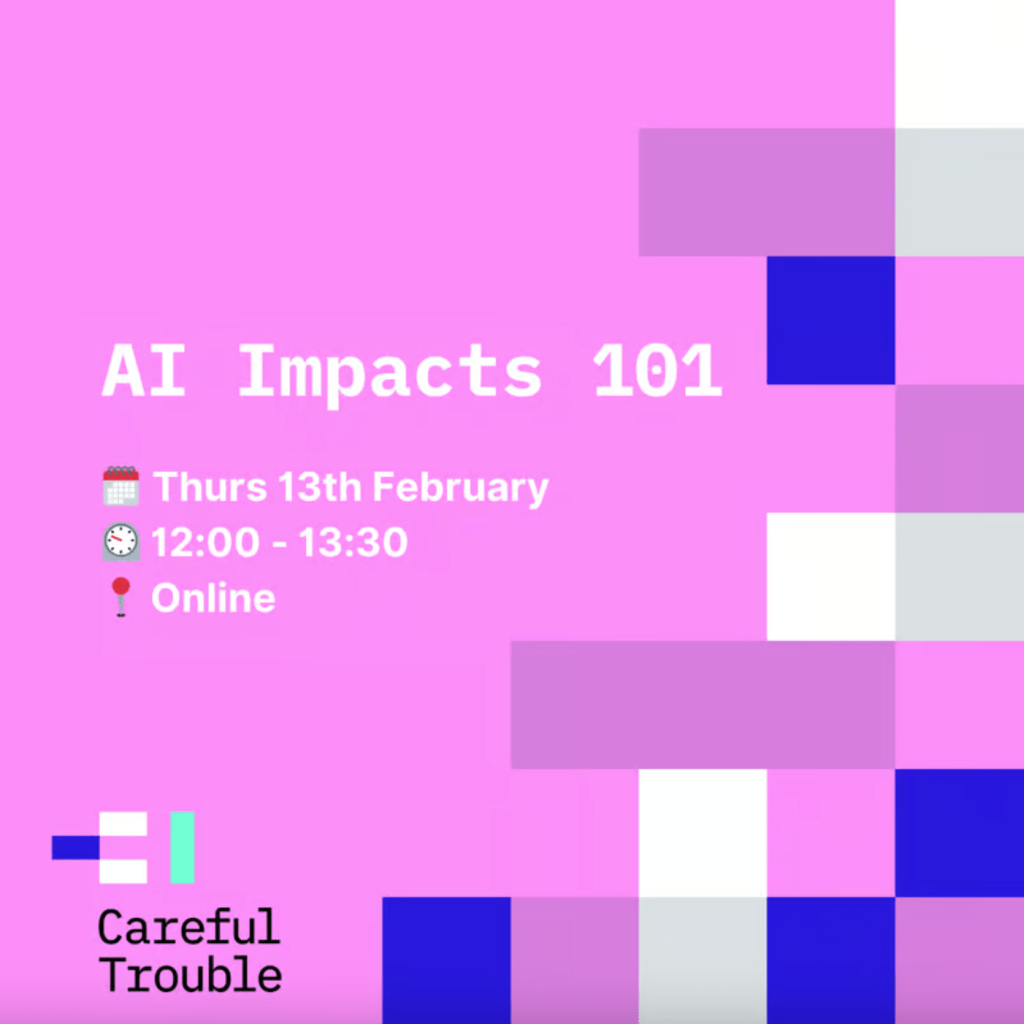

I saw that Rachel Coldicutt is launching an AI 101 course and given how much I learned from her take on it over the last couple of years (though apparently she was ‘dunking on AI for clout’!) I think I can safely recommend it if you have similar confused thoughts to me.

Oh well – these days every day is a school day – let’s see what lesson Bluesky will teach me today on this topic!

3 responses to “Internal AI imbroglio”

I’m right there with you. Here in the U.S. there’s a belief that we’re “way ahead” with AI tech and need to keep that leadership position globally. Hence the fanfare about $500B being spent by OpenAI, Oracle, and others to build countless data centers, not to mention executive orders from Biden (2023) and Trump (2025) to ramp up government work and investment in this sector.

But it feels like we’re leading a charge into environmental collapse, employment collapse, and cultural homogenization, all to win an imaginary war with, well… China, maybe?

I have used LLM tools myself, and I’ve figured out what they do (statistically-predictive language), which is kinda useful here and there. And they are adding some scripting around the edges so you can string language and commands together in ways that feel almost natural, or at least novel.

But none of this is “intelligence” in any meaningful sense of the word.

So I’m watching and experimenting, but not committing to any notable changes. We have a lot to figure out yet. Like how to get power demands down, to reduce the environmental damage and contain costs.

Something will come of this (unlike blockchain, the metaverse, NFTs, or Web 3.0). I just don’t know what.

[…] https://digitalbydefault.com/2025/01/25/internal-ai-imbroglio […]

[…] Matt Jukes wrote here about his internal AI Natalie Imbruglia imbroglio – like many of us he’s torn, cold and shamed, lying naked on the floor. […]